I wrote this post for lecture 17 in Andrew Ng's lecture collection on Machine Learning. In my previous post, we discussed Markov Decision Processes (MDPs) in their simplest form, where the set of states and the set of actions are both finite. But in real-world applications, the sets of states and actions can be infinite and even continuous. For example, if we want to model the state of a self-driving car in a 2D plane, we need at least its position \((x, y)\), the direction \(\theta\) in which the car is pointing, its velocity \((v_x, v_y)\), and the rate of change \(r\) of \(\theta\). So the state space of a car is at least 6-dimensional. As for actions, we can control how fast the car goes in direction \(\theta\), and we can also control \(r\). Thus the action space has dimension 2.

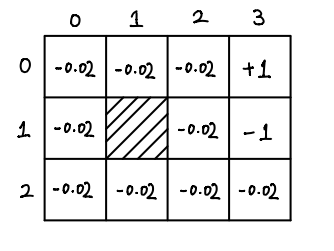

In this post, we consider only continuous states with finite actions. Indeed, the action space usually has a much lower dimension than the state space, so when actions are continuous, we can simply discretize the action space to get a finite set of representative actions. One may argue that we can also discretize the state space. Yes, we can, but only when the dimension \(n\) of the state space is small enough: if we discretize each dimension into \(k\) parts, we end up with \(k^n\) states. If \(n\) is large, then \(k^n\) is too many states to handle. This is the so-called curse of dimensionality. Moreover, discretizing the state space usually results in a lack of smoothness, because anything defined on the grid, such as the value function, is treated as constant within each cell.
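To get a feel for how quickly \(k^n\) grows, here is a minimal sketch of grid discretization in plain Python. The function names `num_grid_states` and `discretize`, as well as the state bounds used in the example, are my own illustrative choices and not something from the lecture.

```python
import math

def num_grid_states(k, n):
    """Number of grid cells when each of n dimensions is split into k parts."""
    return k ** n

def discretize(state, low, high, k):
    """Map a continuous state to a tuple of per-dimension bin indices in [0, k-1].

    `low` and `high` are assumed bounds for each coordinate (hypothetical values).
    """
    cell = []
    for s, lo, hi in zip(state, low, high):
        frac = (s - lo) / (hi - lo)           # rescale the coordinate to [0, 1]
        idx = math.floor(frac * k)            # pick the bin it falls into
        cell.append(min(max(idx, 0), k - 1))  # clamp so boundary values stay on the grid
    return tuple(cell)

# With k = 10 bins per dimension, the 6-dimensional car state already needs 10**6 cells.
for n in (2, 6, 10):
    print(n, num_grid_states(10, n))
```

Every state inside the same cell is mapped to the same index tuple, which is exactly where the lack of smoothness comes from: two nearby states on opposite sides of a cell boundary are treated as unrelated.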